List Crawler: This guide explores automated data extraction from web pages, focusing on the technique of list crawling. We cover how to design, build, and optimize list crawlers, from fundamental concepts to advanced techniques for handling complex data structures and errors, so that you can retrieve data efficiently from online sources.

We will examine the core components of a list crawler, the various types of lists it can target (ordered, unordered, nested), and effective strategies for navigating diverse HTML structures. Data extraction techniques, including handling inconsistencies and complex nested lists, will be detailed, along with robust error handling and performance optimization strategies for scalability and efficiency. Ethical considerations and practical applications across various industries will also be discussed.

Understanding List Crawler Functionality

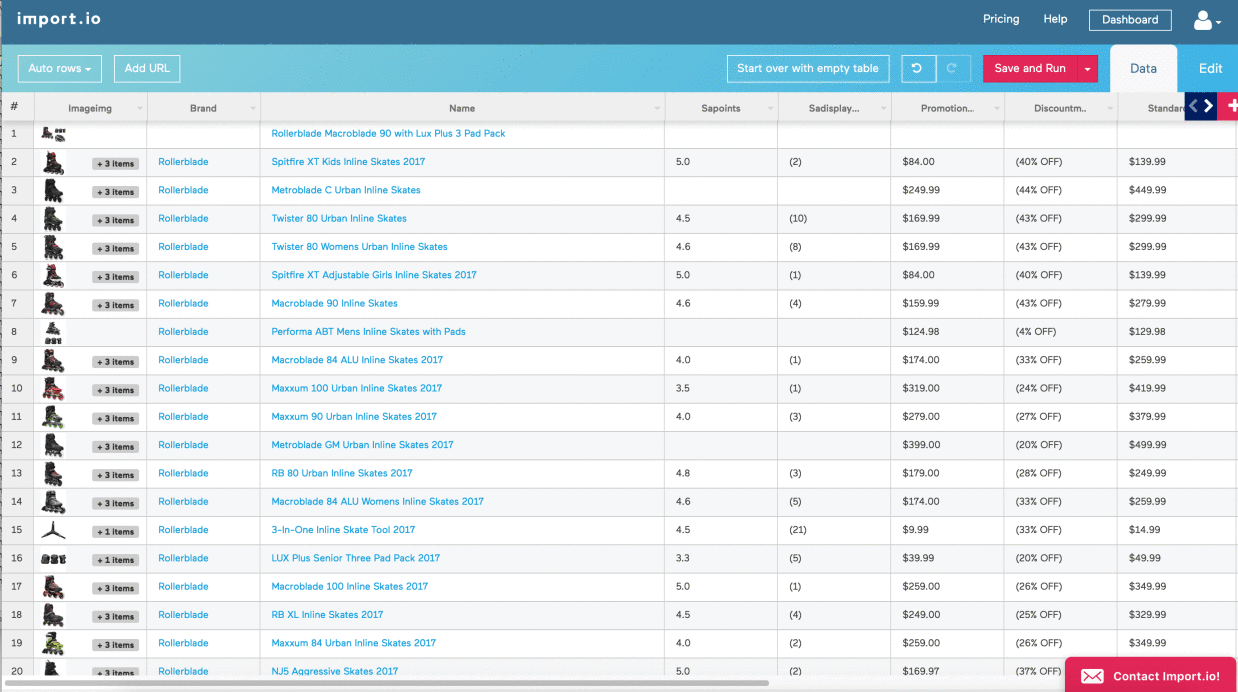

A list crawler is a specialized web crawler designed to extract data from lists on web pages. Unlike general-purpose crawlers that process entire pages, a list crawler focuses on identifying and extracting information organized in list formats, which gives it a more targeted approach to data collection. This makes it particularly useful for tasks like price comparison, product aggregation, or gathering contact information.

List crawlers streamline the collection of information presented in lists, a common format on many websites. The resulting datasets can be built automatically and then used in applications ranging from market research to lead generation.

Core Components of a List Crawler

A typical list crawler comprises several key components working in concert. These include a URL fetcher, responsible for retrieving web pages; an HTML parser, to dissect the page’s structure and identify lists; a list extractor, to isolate list items; and a data storage mechanism, such as a database or file, to save the extracted data. Robust error handling and mechanisms to manage crawling speed and politeness are also crucial components.
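The four core components can be sketched as separate functions. This is a minimal sketch rather than a reference implementation. To stay dependency-free it uses Python's standard library (`urllib.request` to fetch, `html.parser` to parse and extract, `csv` to store) instead of `requests` and Beautiful Soup. It also assumes flat (non-nested) lists, and all function and class names are hypothetical.

```python
import csv
import urllib.request
from html.parser import HTMLParser


def fetch_page(url, timeout=10):
    """URL fetcher: download a page and decode it to text."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class ListItemParser(HTMLParser):
    """HTML parser and list extractor: collects the raw text of each <li>."""

    def __init__(self):
        super().__init__()
        self.items = []
        self._in_item = False

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self.items.append("")
            self._in_item = True

    def handle_endtag(self, tag):
        if tag == "li":
            self._in_item = False

    def handle_data(self, data):
        if self._in_item:
            self.items[-1] += data


def extract_list_items(html):
    """Return the whitespace-normalized text of every non-empty list item."""
    parser = ListItemParser()
    parser.feed(html)
    parser.close()
    return [" ".join(text.split()) for text in parser.items if text.strip()]


def store_items(items, stream):
    """Data storage: write one item per CSV row to an open text stream."""
    writer = csv.writer(stream)
    writer.writerow(["item"])
    for item in items:
        writer.writerow([item])
```

To run it end to end, fetch a real page with `fetch_page` and pass the result to `extract_list_items`. Then write the items with `store_items` to a file opened with `newline=""`, as the `csv` module recommends.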

Types of Lists Targeted by List Crawlers

List crawlers are capable of handling diverse list formats. They can target ordered lists (`<ol>`), indicated by numbered items; unordered lists (`<ul>`), marked by bullets or other symbols; and nested lists, where lists are embedded within other lists, creating a hierarchical structure. The ability to navigate and extract data from these varied structures is a key feature of a sophisticated list crawler.

Whatever their markup, the lists found on real websites carry many kinds of data. Common targets include:

- E-commerce Data: Product names, prices, descriptions, reviews, ratings, availability, and specifications.

- Real Estate Data: Property listings, addresses, prices, features, and contact information.

- Job Postings: Job titles, descriptions, requirements, locations, and application links.

- News Articles: Headlines, summaries, publication dates, and author information (from websites with structured news lists).

- Research Data: Citations, publication details, and author affiliations (from academic databases presenting data in lists).

This data supports applications in many industries:

- E-commerce: Price comparison websites, market research, inventory management, competitor analysis.

- Real Estate: Property valuation models, market analysis, lead generation, property search engines.

- Recruitment: Job aggregators, talent acquisition, candidate screening, market analysis of skills demand.

- Research: Academic literature review, citation analysis, data aggregation for research studies, trend identification.

- Finance: Financial news aggregation, market data collection, risk assessment (with appropriate data sources and legal compliance).

Once extracted, list items are typically held in one of the following data structures:

- Lists (Arrays): Simple lists are often used to store sequences of extracted items, particularly when the order of items is significant.

- Dictionaries (Hash Maps): Dictionaries are particularly useful for storing data as key-value pairs. This is ideal when each list item has multiple attributes (e.g., a product name, price, and description).

- Custom Objects/Classes: For complex data structures, custom classes can be defined to represent each list item, encapsulating its attributes and methods for data manipulation.

- Relational Database Tables: When dealing with large datasets or complex relationships between data points, relational databases provide efficient storage and retrieval mechanisms.

Handling Various HTML Structures Containing Lists

Handling different HTML structures is a significant challenge. A robust list crawler must cope with variations in HTML tags, attributes, and CSS styling. For example, a list might not be marked up with `<ul>` or `<ol>` tags at all. Instead, it might be built from generic elements such as `<div>`s that are styled with CSS to look like a list. The crawler needs to account for these variations and extract data reliably regardless of the underlying markup. It might use heuristics, such as detecting repeated sibling elements with similar formatting or structure, to identify lists even when they deviate from standard HTML conventions.

Building a Simple List Crawler Using Python

Building a simple list crawler in Python involves the following steps:

- Import necessary libraries: This includes libraries like `requests` for fetching web pages and `Beautiful Soup` (the `bs4` package) for parsing HTML.

- Define target URLs: Specify the websites or web pages to crawl. This might be a single URL or a list of URLs.

- Fetch web pages: Use the `requests` library to retrieve the HTML content of each target URL.

- Parse HTML: Employ `Beautiful Soup` to parse the HTML, creating a navigable tree structure.

- Identify lists: Use `Beautiful Soup`'s `find_all()` method to locate `<ul>` and `<ol>` tags. If standard tags are not used, apply more sophisticated techniques that identify lists from structural patterns.

- Extract list items: Extract the text content of each list item within the identified lists.

- Store extracted data: Save the extracted data in a structured format such as a CSV file or a database.

- Handle errors: Implement error handling to gracefully manage situations like network issues or invalid HTML.

A basic Python code snippet illustrating list item extraction using Beautiful Soup might look like this:

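```python
import requests
from bs4 import BeautifulSoup

# Fetch the HTML (placeholder URL; replace it with a real target page)
url = "https://example.com/list-page"
response = requests.get(url, timeout=10)
response.raise_for_status()  # raise requests.HTTPError for 4xx/5xx responses
html_content = response.text

# Parse the HTML and print the text of every item in every unordered list
soup = BeautifulSoup(html_content, "html.parser")
for ul in soup.find_all("ul"):
    for li in ul.find_all("li"):
        print(li.get_text(strip=True))
```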
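The snippet above treats every list as flat. `soup.find_all("ul")` also returns inner lists, and `ul.find_all("li")` searches all descendants, so items of nested lists are printed more than once. One way to preserve the hierarchy is to build a tree of items. The sketch below is an illustration under our own assumptions, not the article's method. It uses the standard library's `html.parser` so it runs without third-party packages, and `NestedListParser` and `parse_lists` are hypothetical names. With Beautiful Soup, the equivalent approach is to walk each list with `find_all("li", recursive=False)` and recurse into any child `<ul>` or `<ol>`.

```python
from html.parser import HTMLParser


class NestedListParser(HTMLParser):
    """Builds {'text': ..., 'children': [...]} items from <ul>/<ol> markup."""

    def __init__(self):
        super().__init__()
        self.lists = []   # top-level lists; each is a list of item dicts
        self._open = []   # stack of [items, current_item] for each open <ul>/<ol>

    def handle_starttag(self, tag, attrs):
        if tag in ("ul", "ol"):
            if self._open and self._open[-1][1] is not None:
                # A list inside an <li> becomes that item's children.
                items = self._open[-1][1]["children"]
            else:
                items = []
                self.lists.append(items)
            self._open.append([items, None])
        elif tag == "li" and self._open:
            item = {"text": "", "children": []}
            self._open[-1][0].append(item)
            # Starting a new <li> implicitly closes an unclosed sibling.
            self._open[-1][1] = item

    def handle_endtag(self, tag):
        if tag in ("ul", "ol") and self._open:
            self._open.pop()
        elif tag == "li" and self._open:
            self._open[-1][1] = None

    def handle_data(self, data):
        if self._open and self._open[-1][1] is not None:
            self._open[-1][1]["text"] += data


def _normalize(items):
    """Collapse whitespace in item text, recursively."""
    return [
        {"text": " ".join(item["text"].split()), "children": _normalize(item["children"])}
        for item in items
    ]


def parse_lists(html):
    """Return every top-level list in `html` as a tree of item dicts."""
    parser = NestedListParser()
    parser.feed(html)
    parser.close()
    return [_normalize(items) for items in parser.lists]
```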

Handling Errors and Edge Cases

A robust list crawler must anticipate and gracefully handle various errors and unexpected situations. Failing to do so can lead to incomplete data, inaccurate results, and even program crashes. This section details strategies for mitigating these issues and building a more resilient crawler. Effective error handling ensures the crawler’s reliability and prevents disruptions to its operation.

List crawlers frequently encounter malformed or broken HTML, resulting from poor website design, dynamic content loading, or server-side errors. Furthermore, network issues, timeouts, and unexpected website changes can all contribute to challenges. Implementing robust error handling is crucial for maintaining the crawler’s stability and data integrity. This involves anticipating potential problems, implementing appropriate checks, and providing informative error messages to aid debugging.

Strategies for Handling Malformed HTML

Dealing with broken or malformed HTML requires a multi-pronged approach, starting with well-structured exception handling. Lenient parsers help as well. Beautiful Soup, for example, does not reject most broken markup but builds a best-effort parse tree. The underlying parser it is given (`html.parser`, `lxml`, or `html5lib`) determines how unclosed or misnested tags are repaired. Where a section of a page is too damaged to parse reliably, regular expressions can extract specific data from it directly.

Finally, incorporating retry mechanisms with exponential backoff can help overcome temporary network issues or server-side problems that might lead to malformed responses.
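The following is a minimal sketch of a retry loop with exponential backoff. It assumes pages are fetched with the standard library's `urllib.request`. The helper names and default delays are our own assumptions, as is the choice to retry only network errors, timeouts, HTTP 429, and 5xx responses. Permanent errors such as 404 are raised immediately. A small random jitter keeps many workers from retrying in lockstep.

```python
import random
import time
import urllib.error
import urllib.request


def default_fetch(url):
    """Fetch a URL with urllib and return the raw response body."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read()


def is_transient(exc):
    """Treat network failures, timeouts, HTTP 429 and 5xx responses as retryable."""
    if isinstance(exc, urllib.error.HTTPError):  # subclass of URLError: check first
        return exc.code == 429 or exc.code >= 500
    return isinstance(exc, (urllib.error.URLError, TimeoutError, ConnectionError))


def fetch_with_retry(url, fetch=default_fetch, max_attempts=4, base_delay=1.0, max_delay=30.0):
    """Call fetch(url), retrying transient errors with exponential backoff and jitter."""
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except Exception as exc:
            if attempt == max_attempts - 1 or not is_transient(exc):
                raise
            # Waits grow as base_delay * 1, 2, 4, ... seconds, capped at max_delay.
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Up to 10% random jitter so parallel workers do not retry in lockstep.
            time.sleep(delay + random.uniform(0, delay / 10))
```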

Potential Errors and Exceptions

A list crawler might encounter various errors. Network-related errors include timeouts, connection resets, and DNS resolution failures. HTML parsing errors stem from malformed HTML, missing tags, or encoding issues. Website-specific errors could be due to rate limiting, access restrictions (robots.txt), or changes in the website structure. Internal errors might include memory exceptions or unexpected data types.

Implementing Robust Error Handling and Recovery

Robust error handling involves a combination of techniques. Try-except blocks (or similar constructs in other languages) are essential for catching and handling exceptions. Logging errors with timestamps and relevant context aids debugging and monitoring. Retry mechanisms with exponential backoff strategies help overcome transient network problems. Implementing circuit breakers can prevent repeated attempts to access unresponsive resources.

Regular expressions can be used to extract data even from partially malformed HTML. Finally, comprehensive unit and integration testing ensures the crawler’s resilience to various error conditions.
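Logging and a circuit breaker can be combined as in this sketch. The class name, the threshold and cooldown defaults, and the injectable `clock` (used so the behavior can be tested without waiting) are assumptions for illustration. After `threshold` consecutive failures, calls are refused for `cooldown` seconds. After that, a single trial call is allowed through: success resets the breaker and another failure reopens it.

```python
import logging
import time

logger = logging.getLogger("list_crawler")


class CircuitOpenError(RuntimeError):
    """Raised instead of calling a resource that is currently considered down."""


class CircuitBreaker:
    """Refuse calls for `cooldown` seconds after `threshold` consecutive failures."""

    def __init__(self, threshold=3, cooldown=60.0, clock=time.monotonic):
        self.threshold = threshold
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # clock() value when the circuit opened

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown:
                raise CircuitOpenError("resource marked unavailable; call skipped")
            self.opened_at = None  # cooldown elapsed: let one trial call through
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            logger.warning("call failed (%d consecutive failures)", self.failures, exc_info=True)
            if self.failures >= self.threshold:
                self.opened_at = self.clock()
                logger.error("circuit opened for %.0f seconds", self.cooldown)
            raise
        self.failures = 0
        return result
```

Calling `logging.basicConfig(format="%(asctime)s %(levelname)s %(message)s")` once at startup adds the timestamps recommended above to every logged failure.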

Examples of Error Messages and Their Interpretations

Understanding error messages is crucial for diagnosing and resolving issues. The following table provides examples of common errors, their causes, and possible solutions.

| Error Type | Description | Cause | Solution |
|---|---|---|---|
| HTTPError 404 | Not Found | The requested URL does not exist. | Verify the URL's accuracy. Check for typos or outdated links. |
| HTTPError 500 | Internal Server Error | A problem occurred on the server. | Retry the request after a delay. Check the website's status. |
| URLError | Connection Error | Network connectivity issues or DNS resolution failure. | Check network connection. Verify DNS settings. |
| ParserError | Invalid HTML | Malformed or incomplete HTML structure. | Use a robust HTML parser that handles malformed HTML gracefully. Consider using regular expressions for targeted data extraction. |
| TimeoutError | Request timed out | The server did not respond within the allotted time. | Increase the timeout value. Check server responsiveness. |
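The table can also be encoded directly so that the crawler logs a suggested remedy alongside each failure. This sketch assumes pages are fetched with `urllib.request`. That module's `HTTPError` is a subclass of `URLError`, so it must be checked first. The action strings are hypothetical labels. The table's `ParserError` row is left out because lenient parsers such as Python's `html.parser` rarely raise on malformed markup.

```python
import socket
import urllib.error


def suggested_action(exc):
    """Map a fetch exception to the remedy suggested in the error table."""
    if isinstance(exc, urllib.error.HTTPError):  # check before URLError (subclass)
        if exc.code == 404:
            return "verify the URL"
        if exc.code >= 500:
            return "retry after a delay"
        return "inspect the response"
    if isinstance(exc, (TimeoutError, socket.timeout)):
        return "increase the timeout"
    if isinstance(exc, urllib.error.URLError):
        # urlopen may wrap a connect timeout inside URLError.reason
        if isinstance(exc.reason, (TimeoutError, socket.timeout)):
            return "increase the timeout"
        return "check network and DNS settings"
    return "log and investigate"
```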

List Crawler Applications and Use Cases

List crawlers, as powerful web scraping tools, offer a wide array of applications across diverse industries. Their ability to efficiently extract structured data from lists makes them invaluable for automating data collection tasks that would otherwise be extremely time-consuming and labor-intensive. This section will explore several key applications and use cases, highlighting the types of data extracted and the ethical considerations involved.

The core functionality of a list crawler lies in its capacity to identify and extract data from list-formatted elements on web pages. This data can range from simple textual information to complex structured datasets, depending on the website’s structure and the crawler’s design. The versatility of this approach makes it adaptable to a variety of scenarios.

Web Scraping Scenarios

List crawlers are exceptionally well-suited for web scraping tasks involving structured data presented in lists. For instance, extracting product information (name, price, description) from an e-commerce website’s product catalog, or gathering contact details (name, email, phone number) from a company directory. The crawler’s ability to parse HTML and identify list elements allows for the systematic extraction of this information, significantly reducing the time and effort required for manual data entry.

This automated approach is crucial for businesses seeking to analyze market trends, monitor competitor pricing, or update their own databases.
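For the product-catalog case, each list item can be turned into a dictionary of named fields. The markup assumed here is hypothetical: each product is an `<li class="product">` containing `<span class="name">` and `<span class="price">`. Real sites differ, so the class names must be adapted. The sketch uses the standard library's `html.parser` and parses prices with `Decimal` to avoid floating-point rounding.

```python
from decimal import Decimal, InvalidOperation
from html.parser import HTMLParser


class ProductListParser(HTMLParser):
    """Collects name/price fields from <li class="product"> items (assumed markup)."""

    FIELDS = ("name", "price")

    def __init__(self):
        super().__init__()
        self.records = []
        self._in_product = False
        self._field = None  # field whose <span> is currently open

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "li" and "product" in classes:
            self.records.append({})
            self._in_product = True
        elif tag == "span" and self._in_product:
            for field in self.FIELDS:
                if field in classes:
                    self._field = field

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li":
            self._in_product = False

    def handle_data(self, data):
        if self._field:
            record = self.records[-1]
            record[self._field] = record.get(self._field, "") + data


def parse_price(text):
    """Turn text such as '$1,099.00' into Decimal('1099.00'); None if unparseable."""
    cleaned = text.strip().lstrip("$").replace(",", "")
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        return None


def parse_products(html):
    """Return one {'name': ..., 'price': ...} dict per product list item."""
    parser = ProductListParser()
    parser.feed(html)
    parser.close()
    return [
        {"name": " ".join(r.get("name", "").split()), "price": parse_price(r.get("price", ""))}
        for r in parser.records
    ]
```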

Types of Extracted Data

The diversity of data extractable using list crawlers is considerable, spanning product catalogs, property listings, job postings, news articles, and research citations.

Applications Across Industries

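The applicability of list crawlers extends to numerous sectors, including e-commerce, real estate, recruitment, academic research, and finance. In these sectors they support tasks such as price comparison, market analysis, job aggregation, citation analysis, and market data collection.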

Ethical Considerations and Legal Implications

Employing list crawlers necessitates a strong awareness of ethical considerations and legal implications. Respecting website terms of service, adhering to robots.txt directives, and avoiding overloading target servers are crucial. Furthermore, understanding and complying with data privacy regulations (like GDPR and CCPA) is paramount, especially when handling personally identifiable information. Unauthorized scraping can lead to legal repercussions, including cease-and-desist letters and lawsuits.

Responsible use involves obtaining explicit permission whenever possible, limiting data collection to publicly accessible information, and implementing measures to protect user privacy.
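Respecting robots.txt can be automated with Python's standard `urllib.robotparser` module. In this sketch the user-agent string is a hypothetical placeholder. The one-second fallback delay is an assumption used when the site declares no `Crawl-delay`. Note that robots.txt only expresses the site operator's crawling preferences. It does not replace reviewing the terms of service or the privacy obligations mentioned above.

```python
import urllib.robotparser

USER_AGENT = "ExampleListCrawler/0.1"  # hypothetical user-agent string


def load_robots(robots_url):
    """Download and parse a site's robots.txt (e.g. https://example.com/robots.txt)."""
    parser = urllib.robotparser.RobotFileParser()
    parser.set_url(robots_url)
    parser.read()
    return parser


def may_fetch(parser, url):
    """True if robots.txt allows USER_AGENT to fetch `url`."""
    return parser.can_fetch(USER_AGENT, url)


def request_delay(parser, default=1.0):
    """Seconds to wait between requests: the site's Crawl-delay, else `default`."""
    delay = parser.crawl_delay(USER_AGENT)
    return float(delay) if delay is not None else default
```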

Visual Representation of a List Crawler’s Workflow

Understanding the inner workings of a list crawler is best achieved through visualizing its data flow and operational stages. This section provides a detailed description of the process, from initial crawling to final data storage.

A list crawler systematically extracts information from a series of web pages containing lists. This process involves several distinct phases, each crucial for successful data retrieval and management. The efficiency and accuracy of the entire operation depend heavily on the smooth execution of each stage.

Data Flow within a List Crawler

The data flow in a list crawler follows a sequential pattern. It begins with identifying target URLs, proceeds through the stages of crawling, parsing, and finally, data storage. Each step utilizes specific techniques and data structures to ensure the integrity and organization of the extracted information. The entire process can be represented as a pipeline where the output of one stage serves as the input for the next.

The process can be summarized as follows: Target URLs → Crawling → Parsing → Data Storage. Each arrow represents a transformation of the data.

Stages of a List Crawler’s Operation

The operation of a list crawler can be broken down into three primary stages: crawling, parsing, and data storage.

Crawling involves systematically retrieving the content of the target web pages. In Python this is typically done with an HTTP client such as `requests`, which follows redirects by default. Alternatively, a crawling framework such as Scrapy manages request scheduling and can honor robots.txt through its `ROBOTSTXT_OBEY` setting. Beautiful Soup only parses HTML and does not fetch pages. The crawler follows links from an initial set of URLs, respecting website rules and limitations to avoid overloading the server.
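Link-following with basic politeness can be sketched as a breadth-first crawl. The sketch makes several assumptions. The caller supplies a `fetch` function that returns HTML for a URL; in practice this would be one that checks robots.txt and retries transient errors. Only links on the starting host are followed, URL fragments are discarded, and a fixed delay separates requests. `crawl` and `LinkParser` are hypothetical names.

```python
import time
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkParser(HTMLParser):
    """Collects the href of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=50, delay=1.0):
    """Breadth-first crawl of start_url's host; yields (url, html) pairs."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    fetched = 0
    while queue and fetched < max_pages:
        url = queue.popleft()
        html = fetch(url)
        fetched += 1
        yield url, html
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            link = urljoin(url, href).split("#", 1)[0]  # absolute URL without fragment
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
        if queue and fetched < max_pages:
            time.sleep(delay)  # pause between requests to avoid overloading the server
```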

Parsing is the process of extracting relevant information from the retrieved HTML content, using techniques like regular expressions or HTML parsing libraries to identify and isolate the specific list items. The extracted data is then cleaned and formatted for consistency and accuracy, for example by removing extra whitespace or standardizing date formats.
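Cleaning steps like these are often small pure functions. The sketch below normalizes whitespace and rewrites dates to ISO 8601 (`YYYY-MM-DD`). The accepted input formats are assumptions. `%d/%m/%Y` reads `05/03/2024` as 5 March (day first), which would be wrong for a site using US-style dates. Month names are matched in the default C locale (English).

```python
from datetime import datetime

# Assumed input formats, tried in order.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y")


def clean_text(value):
    """Collapse runs of whitespace (spaces, tabs, newlines) into single spaces."""
    return " ".join(value.split())


def standardize_date(value, formats=DATE_FORMATS):
    """Return an ISO 8601 date string, or None if no format matches."""
    text = clean_text(value)
    for fmt in formats:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None
```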

Data Storage is the final step, where the extracted information is saved in a structured format. This could involve storing the data in a relational database (like MySQL or PostgreSQL), a NoSQL database (like MongoDB), a CSV file, or even a JSON file, depending on the specific requirements of the application. The choice of storage method impacts data accessibility, scalability, and querying capabilities.
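As an illustration, the same records can be written to a CSV file or to SQLite, both available in Python's standard library. The table name and columns are assumptions for this sketch. So is using the item URL as a unique key, which makes re-crawling a page replace rows instead of duplicating them.

```python
import csv
import sqlite3


def save_csv(records, stream):
    """Write a list of dicts as CSV with a header row taken from the first record."""
    if not records:
        return
    writer = csv.DictWriter(stream, fieldnames=list(records[0]))
    writer.writeheader()
    writer.writerows(records)


def save_sqlite(records, conn):
    """Upsert records into an `items` table keyed by URL."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS items ("
        "url TEXT PRIMARY KEY, name TEXT, price REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO items (url, name, price) VALUES (:url, :name, :price)",
        records,
    )
    conn.commit()
```

For real files, open the CSV with `open("items.csv", "w", newline="", encoding="utf-8")`. For SQLite, pass a connection such as `sqlite3.connect("items.db")`.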

Data Structures Used in List Crawlers

The efficient management of extracted data relies heavily on appropriate data structures. The choice of data structure depends on the nature of the data and the intended application.

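Commonly used data structures include lists (arrays) for ordered sequences of items, dictionaries (hash maps) for items with several named attributes, custom classes that encapsulate an item's fields and methods, and relational database tables for large or highly related datasets.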

For example, if the crawler extracts product information from an e-commerce website, each product might be represented as a dictionary with keys like “name,” “price,” “description,” and “URL.” Alternatively, a custom class could be created to represent a product, making the code more organized and readable.
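The custom-class alternative might look like this. The `Product` fields mirror the keys named in the text (name, price, description, URL). The `from_dict` helper and the choice of `Decimal` for prices are assumptions. A frozen dataclass is immutable and hashable, which makes duplicates easy to drop with a `set`, and `dataclasses.asdict()` converts it back to a dictionary for storage.

```python
from dataclasses import asdict, dataclass
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class Product:
    """One extracted product list item."""

    name: str
    price: Optional[Decimal]
    description: str
    url: str

    @classmethod
    def from_dict(cls, raw):
        """Build a Product from a dict of raw strings, trimming whitespace."""
        price = (raw.get("price") or "").strip()
        return cls(
            name=(raw.get("name") or "").strip(),
            price=Decimal(price) if price else None,
            description=(raw.get("description") or "").strip(),
            url=raw["url"],
        )
```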

Mastering list crawlers opens doors to efficient data extraction from the web. This comprehensive guide has equipped you with the knowledge to design, build, and optimize these powerful tools, from understanding fundamental components and data extraction techniques to implementing robust error handling and scaling for large datasets. Remember to always prioritize ethical considerations and legal compliance when utilizing list crawlers.

By applying the principles outlined here, you can harness automated data extraction for a variety of applications, improving both efficiency and data analysis capabilities.