List Rawler: This exploration delves into the multifaceted world of automated list data extraction, examining its technical intricacies, legal implications, and diverse applications across various industries. We’ll navigate the complexities of designing efficient list crawlers, addressing challenges like data extraction techniques, error handling, and ethical considerations. This journey will illuminate the potential and pitfalls of harnessing the power of list crawlers for insightful data analysis and informed decision-making.

From understanding the fundamental concepts of list crawling to exploring its practical applications in e-commerce and research, we will cover the entire spectrum. We’ll discuss the legal and ethical boundaries surrounding data scraping and offer best practices for responsible data collection. This comprehensive overview aims to provide a balanced perspective, empowering you to utilize list crawlers effectively and responsibly.

Understanding “List Rawler”

The term “list rawler” is not a standard or established term in common usage or technical dictionaries. It appears to be a neologism, a newly coined word or phrase. Therefore, understanding its meaning requires examining its constituent parts and inferring potential interpretations based on context. The most likely interpretation involves a combination of “list” and “crawler,” suggesting a program or process that systematically extracts information from lists.The term likely refers to a type of web scraping or data extraction tool specifically designed to target and process lists found on websites or within documents.

It implies a more focused approach than a general web crawler, which might traverse entire websites indiscriminately. A list rawler would prioritize extracting data organized in list formats, such as bulleted lists, numbered lists, or tabular data.

Possible Contexts for “List Rawler”

The context in which “list rawler” is used significantly influences its interpretation. For example, it could be used within the following contexts:

In the field of web scraping, a list rawler could be a specialized tool developed for efficient extraction of data from online directories, product catalogs, or any website heavily reliant on list-based information architecture. Imagine a real estate website with listings organized as a series of tables; a list rawler could automate the process of collecting key details from each property listing.

This would be significantly faster and more efficient than manual data entry.

Within the context of data analysis, a list rawler might refer to a custom script or program written to process internally generated lists. For example, a company might use a list rawler to extract specific information from internal databases organized in list formats for reporting or analysis purposes. This could involve extracting order numbers, customer details, or product specifications from internal databases.

In academic research, particularly in fields like computational linguistics or natural language processing, a list rawler could be a component of a larger system designed to analyze textual data. It might be used to extract lists of s, citations, or other relevant information from research papers or other textual sources. Consider a project focused on identifying trends in research publications; a list rawler could extract all s from each publication for frequency analysis.

Implications of Using “List Rawler”

The implications of using the term “list rawler” depend heavily on the audience and the context. Using it in a technical discussion with software engineers would likely be understood, while using it in a non-technical setting might require further explanation. Incorrect or inappropriate usage could lead to miscommunication. Moreover, the legal implications must be considered. Scraping data from websites without permission could be a violation of terms of service or copyright laws.

Always ensure adherence to the website’s robots.txt file and respect intellectual property rights.

Synonyms and Alternative Phrases

Several alternative phrases could convey a similar meaning to “list rawler,” depending on the context. These include: “list extractor,” “list scraper,” “structured data extractor,” “data miner (for lists),” or simply “list processing script.” The choice of the most appropriate synonym depends on the specific application and the technical level of the audience.

Technical Aspects of List Crawling: List Rawler

List crawling, while seemingly straightforward, involves a complex interplay of techniques and considerations to ensure efficiency, robustness, and accuracy. This section delves into the technical intricacies of designing, building, and maintaining a list crawler.

System Architecture for a List Crawler

A hypothetical list crawler system can be structured in a modular fashion. The core components include a scheduler responsible for managing crawling tasks and prioritizing targets; a web fetcher to retrieve HTML content from URLs; a parser to extract relevant list data based on predefined rules or machine learning models; a data cleaner to handle inconsistencies and errors; and a data storage component (e.g., database) to persist the extracted information.

These components interact through a well-defined API, allowing for flexible configuration and scalability. For instance, the scheduler might use a priority queue to manage URLs based on factors such as domain authority or freshness of content. The web fetcher could employ techniques like rotating proxies and user agents to avoid being blocked by websites. The parser would leverage regular expressions or more sophisticated techniques like natural language processing (NLP) depending on the complexity of the target lists.

Challenges in Building a Robust and Efficient List Crawler, List rawler

Building a robust and efficient list crawler presents several challenges. Handling dynamic websites with frequently changing structures requires adaptable parsing techniques. Websites often employ anti-scraping measures, such as CAPTCHAs and rate limiting, which necessitate sophisticated bypass strategies. The sheer volume of data extracted necessitates efficient storage and processing mechanisms. Maintaining the crawler’s compliance with robots.txt and respecting website terms of service is crucial to avoid legal repercussions.

Furthermore, dealing with various data formats and encoding schemes requires robust error handling and data validation procedures. For example, a website might change its HTML structure unexpectedly, rendering the existing parsing rules ineffective. This necessitates a mechanism to detect such changes and adapt accordingly, perhaps through automated learning or manual intervention.

Data Extraction Techniques

Several techniques exist for extracting data from lists. Regular expressions provide a powerful yet flexible approach for pattern matching, suitable for structured lists. However, they can become complex and brittle when dealing with unstructured or inconsistently formatted lists. Web scraping libraries like Beautiful Soup (Python) or Cheerio (Node.js) offer higher-level abstractions, simplifying the process of navigating and extracting data from HTML.

Machine learning models, particularly those based on natural language processing, can be employed for more complex scenarios, handling unstructured lists and nuanced data formats more effectively. For instance, a recurrent neural network (RNN) could be trained to identify list items even if they are not consistently formatted.

Limitations and Error Handling

List crawlers face limitations including website structure changes, anti-scraping mechanisms, and data inconsistencies. Robust error handling is crucial. This includes implementing mechanisms to handle HTTP errors (e.g., 404 Not Found), timeouts, and exceptions during parsing. Retrying failed requests with exponential backoff strategies can improve reliability. Data validation checks, such as type checking and range validation, can help detect and correct errors in the extracted data.

Employing a logging system to track errors and successes facilitates debugging and performance monitoring. For example, if a website changes its HTML structure, the crawler might encounter errors during parsing. A robust error-handling mechanism would log the error, attempt to recover (e.g., by using alternative parsing strategies), and continue processing other URLs.

Flowchart of the List Crawling Process

A flowchart would depict the following steps: 1. Initialization (Setting parameters, loading configuration). 2. URL Retrieval (Fetching URLs from a seed list or database). 3.

Web Page Fetching (Retrieving HTML content). 4. Data Parsing (Extracting list data using chosen techniques). 5. Data Cleaning (Handling inconsistencies, errors, and duplicates).

6. Data Validation (Ensuring data accuracy and integrity). 7. Data Storage (Persisting data to a database or file). 8.

Reporting (Generating logs and summaries). The flowchart would visually represent the flow of control between these steps, showing decision points (e.g., handling errors) and loops (e.g., iterating through URLs).

Data Cleaning and Validation

Data cleaning and validation involve several steps: 1. Data Deduplication (Removing duplicate entries). 2. Data Normalization (Converting data to a consistent format). 3.

Data Transformation (Modifying data types or structures). 4. Error Correction (Fixing inconsistencies or inaccuracies). 5. Data Validation (Verifying data against predefined rules or constraints).

For example, if the crawler extracts list items containing both numerical and textual data, the data cleaning process would normalize the numerical data to a consistent format (e.g., converting strings to integers) and standardize the text data (e.g., converting to lowercase).

For descriptions on additional topics like craigslist santa barbara, please visit the available craigslist santa barbara.

Implementation in Pseudocode

“`function crawlList(seedURL, maxDepth) queue = [seedURL]; visited = ; while (queue is not empty and depth < maxDepth) url = queue.dequeue(); if (url not in visited) visited[url] = true; html = fetch(url); listItems = parseList(html); storeData(listItems); newURLs = extractLinks(html); queue.enqueue(newURLs); function parseList(html) //Implementation using regular expressions or a library like Beautiful Soup function storeData(data) //Implementation for storing data in a database or file function extractLinks(html) //Implementation for extracting links from HTML ```

Legal and Ethical Considerations

Web scraping, including the use of list crawlers, operates within a complex legal and ethical landscape. Understanding the potential legal ramifications and ethical implications is crucial for responsible data collection and avoiding potential legal repercussions.

This section Artikels the key legal and ethical considerations surrounding the use of list crawlers, along with best practices to ensure compliance and ethical conduct.

Legal Implications of Unauthorized Scraping

Scraping lists from websites without explicit permission can lead to legal challenges. Website owners retain copyright over their content, including lists and data presented on their sites. Unauthorized access and copying of this data may constitute copyright infringement, a violation that can result in legal action, including cease-and-desist letters, lawsuits for damages, and even injunctions preventing further scraping activities.

The severity of the consequences depends on various factors, including the scale of the scraping operation, the nature of the data collected, and the specific terms of service of the targeted website. Terms of service often explicitly prohibit scraping, and violating these terms can provide grounds for legal action. Furthermore, some jurisdictions have specific laws regulating data collection and the use of automated tools like list crawlers, adding another layer of complexity.

Ethical Considerations of List Crawlers

Beyond the legal aspects, ethical considerations are paramount. Respecting the privacy of individuals whose data might be included in scraped lists is crucial. Even if the data is publicly accessible on a website, scraping and repurposing it without consent can raise ethical concerns, particularly if the data is used in ways that could be detrimental to the individuals involved.

Consider, for instance, scraping a list of email addresses and using them for unsolicited marketing or other intrusive purposes. Transparency is also vital. Ethical data collection practices involve being upfront about the data collection process and its intended use. Failing to be transparent can erode trust and damage the reputation of the individual or organization conducting the scraping.

Potential Risks of Unauthorized Data Collection

Unauthorized data collection using list crawlers carries several risks. Beyond the legal liabilities mentioned earlier, there’s the risk of reputational damage. Being discovered engaging in unauthorized scraping can severely damage an organization’s reputation, impacting its relationships with customers, partners, and the public. Technical risks also exist. Websites often implement measures to detect and block scraping activities, potentially leading to the disruption or failure of the scraping process.

Furthermore, aggressive scraping can overload a website’s server, causing service disruptions for legitimate users. Finally, there is the risk of collecting inaccurate or outdated data, leading to flawed analyses and poor decision-making.

Best Practices for Responsible Data Collection

Responsible data collection using list crawlers involves adhering to both legal and ethical guidelines. This includes obtaining explicit permission from website owners before scraping their data. Respecting robots.txt directives, which specify which parts of a website should not be accessed by automated tools, is also essential. Using polite scraping techniques, such as implementing delays between requests to avoid overloading the website’s server, demonstrates responsible behavior.

Furthermore, data collected should be used ethically and transparently, ensuring compliance with all relevant privacy regulations. Data minimization—collecting only the necessary data—is another important principle. Finally, always be prepared to handle potential legal challenges by documenting the data collection process and having a clear legal strategy in place.

Legal Frameworks and Their Implications for List Crawling

| Legal Framework | Jurisdiction | Key Implications for List Crawling | Enforcement Mechanisms |

|---|---|---|---|

| Copyright Act | Various (e.g., US, EU) | Prohibits unauthorized copying of copyrighted material, including website content. | Civil lawsuits, injunctions. |

| GDPR (General Data Protection Regulation) | European Union | Requires consent for processing personal data; restricts the collection of sensitive data. | Significant fines for non-compliance. |

| CCPA (California Consumer Privacy Act) | California, USA | Grants California residents rights regarding their personal data, including the right to know what data is collected. | Civil penalties. |

| Terms of Service | Website-Specific | Websites can set their own rules regarding data scraping; violating these rules can lead to legal action. | Cease-and-desist letters, account suspension. |

Applications of List Crawlers

List crawlers, by systematically extracting data from online lists, offer a powerful tool across numerous industries. Their ability to automate data gathering significantly reduces manual effort and accelerates data analysis, providing valuable insights for informed decision-making. However, it’s crucial to understand both their advantages and limitations in specific contexts.

E-commerce Applications

In the e-commerce sector, list crawlers can be used to monitor competitor pricing, track product availability, and gather customer reviews from various online marketplaces. For instance, a clothing retailer could use a list crawler to scrape product listings from competing websites, analyzing price points and inventory levels to inform their own pricing strategies and inventory management. This allows for dynamic pricing adjustments and proactive inventory control.

The benefits include improved competitiveness, optimized pricing, and enhanced inventory management. However, drawbacks include the potential for legal issues if terms of service are violated, and the need for ongoing maintenance to adapt to website changes. Compared to manual data collection, list crawlers offer significantly faster and more comprehensive data acquisition, resulting in more agile business decisions.

Research Applications

Academic researchers can leverage list crawlers to gather vast amounts of data from diverse online sources. For example, a researcher studying public opinion on a specific political issue could scrape data from online forums and social media platforms, analyzing sentiment and identifying key themes. This allows for a large-scale analysis of public discourse, providing a more comprehensive understanding of public sentiment than traditional survey methods.

The benefits are increased data volume and breadth of analysis, enabling researchers to identify trends and patterns that might be missed with smaller datasets. Drawbacks include potential biases in the data gathered from specific online platforms and the need for careful data cleaning and validation to ensure accuracy. Compared to manual data collection, list crawlers drastically reduce the time and effort required for data gathering, allowing researchers to focus on analysis and interpretation.

Use Case Scenario: Real Estate Market Analysis

A real estate investment firm wants to analyze property listings in a specific city to identify undervalued properties. A list crawler could be designed to scrape data from multiple real estate websites, collecting information such as property address, price, size, number of bedrooms and bathrooms, and property type. The crawler would then process this data, identifying properties that are significantly below the average market price for comparable properties.

This analysis could help the firm identify potentially lucrative investment opportunities. The crawler would need to be designed to handle variations in data format across different websites and incorporate mechanisms to detect and handle errors. The benefits include a quick and comprehensive market overview, enabling faster identification of investment opportunities. Drawbacks include the potential for inaccurate data due to inconsistencies in online listings and the need for sophisticated data analysis techniques to identify truly undervalued properties.

Potential Use Cases for List Crawlers

The following list Artikels potential applications of list crawlers across various fields:

- Price Comparison Websites: Automatically collecting and comparing prices from multiple e-commerce sites.

- Market Research: Gathering data on consumer preferences and trends from online reviews and forums.

- Job Search Engines: Scraping job listings from various company websites and job boards.

- News Aggregation: Collecting news headlines and articles from multiple news sources.

- Social Media Monitoring: Tracking brand mentions and sentiment analysis on social media platforms.

- Scientific Research: Gathering data from scientific publications and databases.

- Financial Analysis: Collecting financial data from company websites and financial news sources.

- Legal Research: Gathering legal precedents and case law from online legal databases.

Illustrative Examples

This section provides concrete examples of list crawling, showcasing the process, challenges, and outcomes in different scenarios. We will examine a real-world example involving e-commerce data extraction and then present a hypothetical project to further illustrate the versatility of list crawling techniques.

List crawling, while powerful, requires careful planning and execution. Understanding the target website’s structure, respecting robots.txt, and handling potential errors are crucial for successful data acquisition. Ethical considerations and legal compliance are paramount throughout the process.

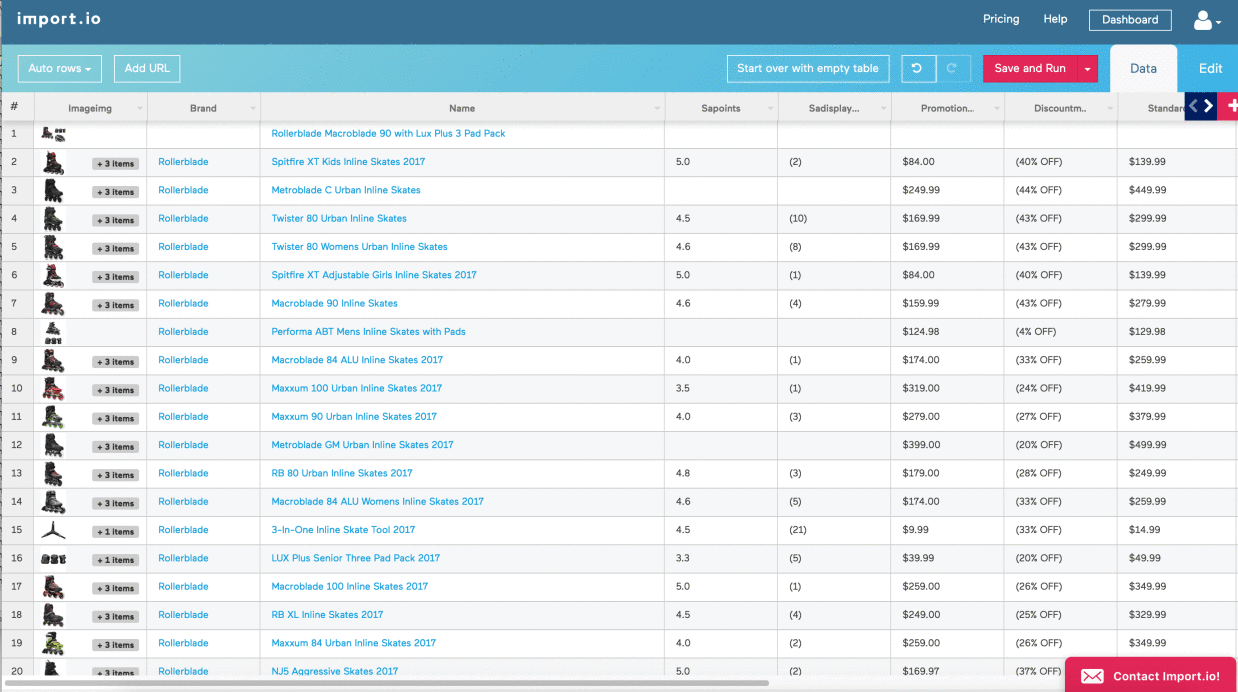

E-commerce Product Catalog Crawling

This example focuses on extracting product information from an online retailer’s website, specifically their product catalog. Imagine we are targeting a hypothetical online store selling handmade jewelry. The website organizes its products into categories (e.g., necklaces, earrings, bracelets) and subcategories (e.g., silver necklaces, gold earrings). Each product page contains details like the product name, description, price, image URLs, and customer reviews.

Our list crawler would start by identifying the URLs of the main product categories. It then navigates to each category page, extracts the URLs of individual product pages within that category, and adds them to a queue for processing. For each product page URL in the queue, the crawler fetches the page content, parses the HTML, and extracts the relevant data points using techniques like XPath or CSS selectors.

The extracted data is then stored in a structured format, such as a CSV file or a database.

Challenges encountered might include:

- Website Structure Variations: The website might have inconsistencies in its HTML structure across different product pages, requiring adjustments to the parsing logic.

- Dynamic Content: Some data, like product availability or pricing, might be loaded dynamically via JavaScript, requiring techniques like headless browser automation to access it.

- Rate Limiting: The website might implement rate limits to prevent abuse, requiring the crawler to incorporate delays and error handling mechanisms.

- Data Cleaning: Extracted data often requires cleaning and normalization to ensure consistency and accuracy before analysis or use.

Hypothetical List Crawling Project: Real Estate Listings

This hypothetical project aims to gather data on real estate listings from various online portals within a specific city. The goal is to analyze market trends, identify pricing patterns, and predict future price movements.

Methodology: The project would employ a multi-threaded list crawler to simultaneously access multiple real estate websites. Each website would be analyzed to identify the URLs of property listings. The crawler would then extract key data points such as property address, price, size, number of bedrooms and bathrooms, property type, and listing date. Data would be cleaned and stored in a database.

Advanced techniques like natural language processing could be used to analyze property descriptions and extract additional features.

Expected Results: The project would deliver a comprehensive dataset of real estate listings, enabling detailed market analysis. This analysis could reveal trends in property prices, identify areas with high demand, and predict future price fluctuations based on historical data and current market conditions. The data could also be used to create visualizations and reports for real estate investors or market researchers.

For example, a heatmap could visually represent price variations across different neighborhoods, highlighting areas with higher or lower property values. The data could also be used to create predictive models using machine learning techniques to forecast future price trends.

In conclusion, list crawlers present a powerful tool for data acquisition, offering significant benefits across various sectors. However, responsible implementation is paramount. Understanding the legal and ethical considerations, coupled with robust technical design, is crucial for maximizing the positive impact while mitigating potential risks. By adhering to best practices and employing responsible data collection methods, we can leverage the power of list crawlers to unlock valuable insights and drive informed decision-making.