List Vrawler, a powerful tool for web data extraction, offers efficient methods for gathering structured information from websites. This process, known as web scraping, allows users to collect data from various online sources, automating tasks that would otherwise require significant manual effort. Understanding the capabilities and limitations of list vrawler is crucial for harnessing its potential while adhering to ethical and legal guidelines.

This guide explores the intricacies of list vrawler, from its fundamental components and techniques to advanced strategies for handling dynamic content and avoiding detection. We will delve into the ethical considerations, data management strategies, and diverse applications of this technology, providing a comprehensive understanding of its potential and limitations. We will also examine various use cases, comparing list vrawler to manual data entry, and discussing its role in e-commerce and research.

Understanding “List Vrawler”

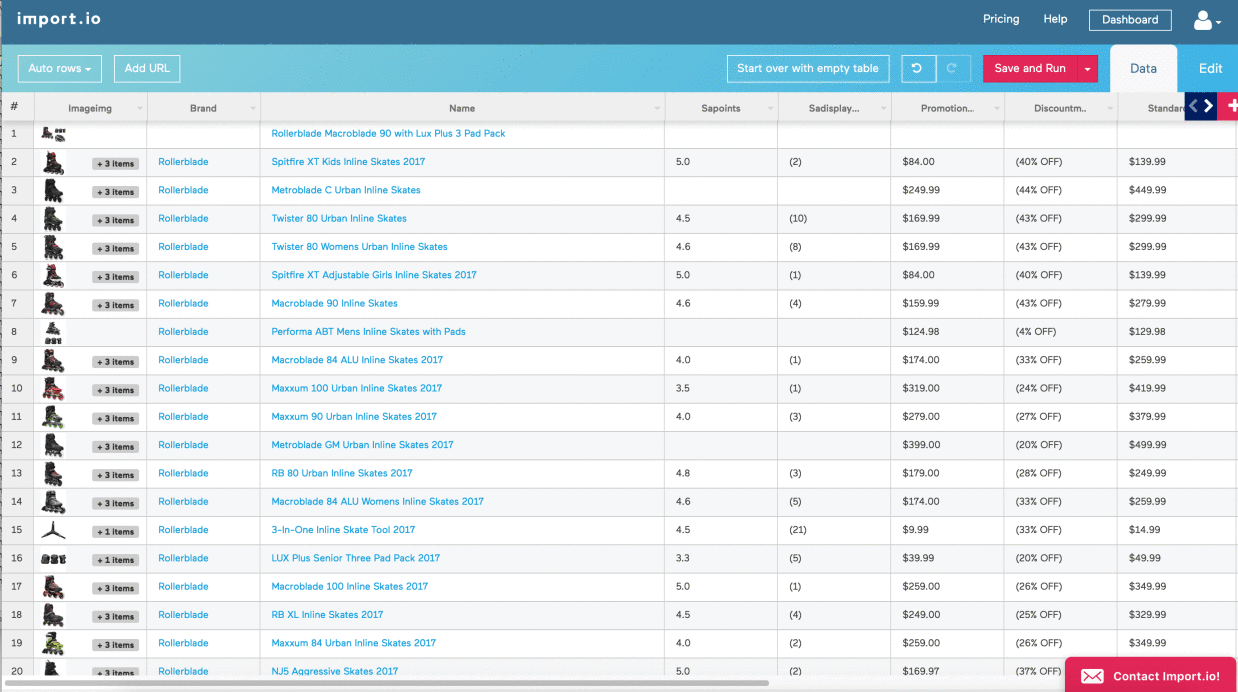

A list vrawler is a type of web scraping tool specifically designed to extract lists of data from websites. Unlike general-purpose web scrapers that might target various elements on a page, a list vrawler focuses on identifying and extracting structured lists, such as lists of products, articles, contact information, or other data organized in a tabular or list-like format.

This specialization allows for efficient extraction of large datasets from websites containing this type of structured information.List vrawlers employ various techniques to identify and extract lists, often utilizing regular expressions, CSS selectors, or XPath expressions to target the specific HTML elements containing the list data. The extracted data is then typically cleaned, formatted, and exported into a structured format such as a CSV file or a database for further analysis or use.

Types of Lists Targeted by List Vrawlers

List vrawlers can target a wide variety of list types found on websites. The specific type of list dictates the approach used for extraction. Some common examples include unordered lists (

- ), ordered lists (

- CSV (Comma Separated Values): A simple, widely supported format ideal for tabular data. Easy to import into spreadsheets and databases. Example: A list of names and email addresses could be stored with each line representing a record, separated by commas. “Name,Email”,”John Doe,[email protected]”,”Jane Smith,[email protected]”

- JSON (JavaScript Object Notation): A lightweight, human-readable format that’s particularly well-suited for structured data. Easy to parse and use in web applications. Example: “contacts”:[“name”:”John Doe”,”email”:”[email protected]”,”name”:”Jane Smith”,”email”:”[email protected]”]

- Databases (SQL, NoSQL): For large datasets or complex relationships, databases provide robust data management capabilities. SQL databases are relational, while NoSQL databases are more flexible for unstructured or semi-structured data.

- Data Validation Checks: Implement checks to ensure data conforms to expected formats and ranges. For instance, reject entries with invalid email addresses or phone numbers.

- Duplicate Removal: Identify and remove duplicate entries to avoid redundancy and improve data quality.

- Error Logging: Record errors and inconsistencies encountered during extraction and processing. This allows for identifying patterns and improving the extraction process.

- Data Transformation: Apply transformations to standardize data formats. For example, convert dates to a consistent format or standardize address formats.

- Handling Missing Values: Decide on a strategy for handling missing data. Options include imputation (filling in missing values based on other data), removal of incomplete records, or flagging missing values for later analysis.

- E-commerce Price Comparison: Automatically gathering pricing data from multiple online retailers to identify the best deals.

- Real Estate Market Analysis: Collecting property listings from various real estate websites to analyze market trends and identify investment opportunities.

- Financial Data Aggregation: Gathering stock prices, financial news, and economic indicators from different sources for investment analysis.

- Academic Research: Extracting data from research papers, journals, and online databases to support research projects.

- Lead Generation and Sales: Collecting contact information from online directories and social media platforms to build marketing lists.

- ), tables (

| Website Example | List Type Extracted | Potential Use Case | Ethical Considerations |

|---|---|---|---|

| Online Retail Store (e.g., Amazon) | Product listings (name, price, description) | Price comparison, market research | Respect robots.txt, avoid overloading the server, do not scrape copyrighted images |

| News Website (e.g., BBC News) | Article headlines and links | Sentiment analysis, trend identification | Respect robots.txt, cite sources appropriately, avoid scraping user comments |

| Real Estate Website (e.g., Zillow) | Property listings (address, price, features) | Real estate market analysis, property valuation | Respect robots.txt, avoid scraping personally identifiable information |

| Job Board Website (e.g., Indeed) | Job postings (title, company, description) | Job market analysis, recruitment | Respect robots.txt, avoid scraping applicant data |

Technical Aspects of List Vrawlers

Building a list vrawler involves a sophisticated interplay of several key components working in concert to efficiently extract and process data from web pages. Understanding these components is crucial for designing effective and robust vrawlers.

Core Components of a List Vrawler are responsible for the systematic retrieval, interpretation, and storage of data. These components work in a pipeline fashion, each stage building upon the output of the previous one.

Web Request Handling

The initial step involves sending HTTP requests to target web pages. This requires careful consideration of factors like request frequency to avoid overloading servers and implementing appropriate error handling to manage situations like network issues or server-side errors. Libraries like `requests` in Python or similar functionalities in other languages provide the necessary tools to make these requests efficiently and reliably.

Properly setting headers (such as `User-Agent`) is vital to mimic legitimate browser behavior and avoid being blocked by websites.

Data Parsing and Extraction

Once the web page content is retrieved, the next crucial step is to extract the relevant list data. Two prominent methods exist: regular expressions and DOM parsing. Regular expressions offer a powerful, albeit often complex, approach to pattern matching within the text content. They are useful for identifying lists based on specific delimiters or formatting patterns. However, regular expressions can become brittle when dealing with variations in website structure or inconsistent formatting.

DOM parsing, on the other hand, treats the HTML as a tree-like structure, allowing for more robust and adaptable list extraction. By navigating this structure, the vrawler can precisely target elements containing lists, such as `

- `, `

- `, or table elements, regardless of variations in surrounding text or formatting. Libraries such as Beautiful Soup in Python offer user-friendly interfaces for DOM parsing.

List Format Handling

Web pages represent lists in various formats. Unordered lists (`

- `) utilize bullet points, ordered lists (`

- `) use numbered sequences, and tables (`

| Task | List Vrawler Method | Manual Method | Comparison of Efficiency |

|---|---|---|---|

| Gathering pricing data for 10 products across 5 retailers | Automated data extraction; estimated time: 15-30 minutes | Manual data entry from each website; estimated time: 2-4 hours | List vrawler is significantly faster (5-10x), reducing time and human error. |

Benefits and Limitations of List Vrawlers for Research

List vrawlers offer substantial benefits for research by automating data collection from diverse online sources. This automation allows researchers to analyze larger datasets and identify patterns that might be missed through manual methods. For example, a researcher studying public opinion on a specific policy could use a list vrawler to collect and analyze comments from online forums and social media platforms.

However, limitations exist. The accuracy of the extracted data depends on the quality of the list vrawler and the structure of the source websites. Websites with dynamic content or complex layouts may pose challenges for accurate data extraction. Furthermore, ethical considerations surrounding data scraping and compliance with website terms of service must be carefully addressed. Over-reliance on automated data extraction without critical evaluation can lead to biased or inaccurate conclusions.

In conclusion, list vrawler represents a significant advancement in data extraction technology, offering powerful capabilities for automating the collection of structured data from the web. By understanding its technical aspects, ethical implications, and diverse applications, users can leverage its potential for increased efficiency and insightful analysis. However, responsible and ethical usage remains paramount, requiring careful consideration of legal restrictions and website terms of service.

Proper data handling and management are also crucial for ensuring the accuracy and usability of the extracted information.