Listceawler, a neologism hinting at a system for intelligently navigating and extracting data from lists, presents a fascinating area of exploration. This document delves into the potential meaning, functionality, applications, ethical considerations, and technical specifications of such a hypothetical system. We will explore its possible uses across various sectors and discuss the potential benefits and challenges it might present.

From conceptual design and functional aspects to ethical implications and technical details, we aim to provide a comprehensive overview of what a “listceawler” might entail. This exploration will consider potential data input and output methods, security frameworks, and suitable programming languages for its development. The potential impact and applications of this technology will be examined, along with comparisons to existing technologies.

Functional Aspects of a Hypothetical “Listceawler”

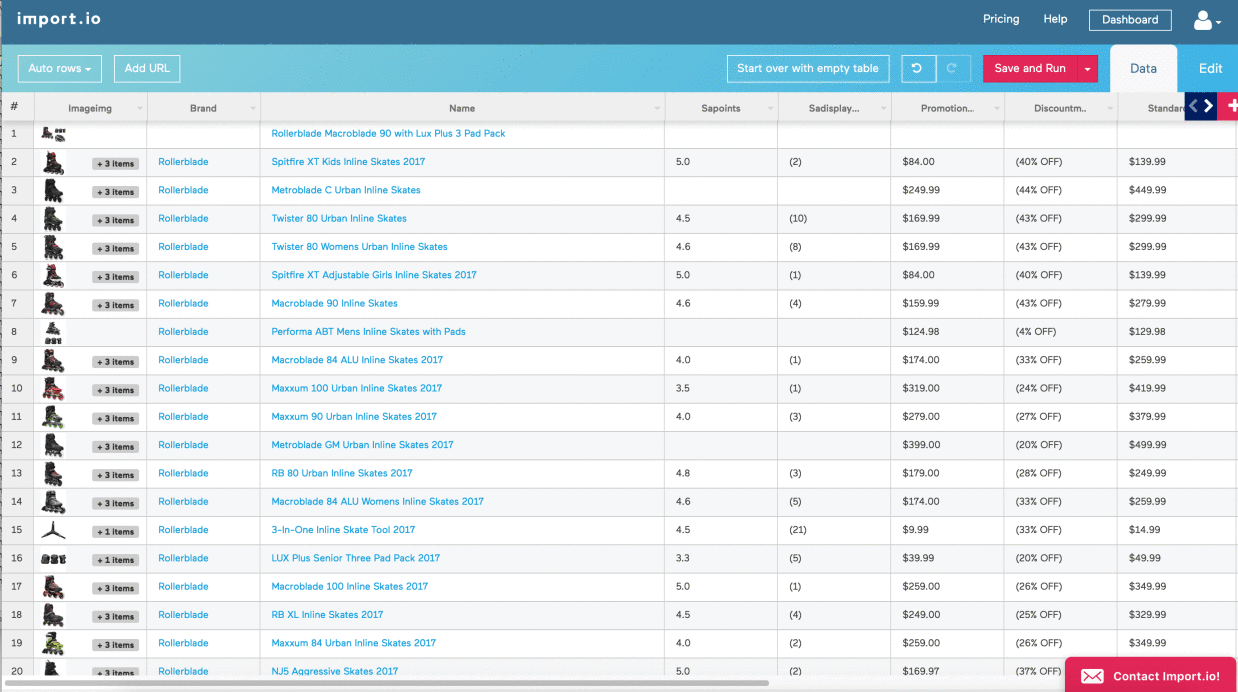

Listceawler is a conceptual system designed to efficiently extract and organize data from various online sources, primarily focusing on lists. Its core functionality lies in its ability to intelligently navigate websites, identify list-based content, and present this information in a structured and usable format. This system aims to automate the tedious process of manual data extraction from web pages containing lists, saving significant time and effort.

Conceptual Model of Listceawler

Listceawler operates on a modular design. The core components include a web crawler module responsible for navigating websites and identifying target pages, a list extraction module that parses HTML to identify and extract list data (ordered and unordered lists), a data cleaning module that handles inconsistencies and standardizes the extracted data, and a data output module that presents the extracted information in the desired format.

These modules interact seamlessly, allowing for a streamlined data extraction process. Error handling mechanisms are integrated throughout the system to manage issues such as broken links, unavailable pages, and malformed HTML.

Core Functionalities of Listceawler

The system’s core functionalities revolve around the automated extraction and organization of list data from websites. This includes the identification of various list types (bulleted, numbered, definition lists), extraction of list items, handling of nested lists, and the management of different data formats within the lists (text, numbers, links, etc.). Furthermore, Listceawler incorporates features to filter and refine extracted data based on user-defined criteria, allowing for targeted data retrieval.

For example, a user could specify s to filter list items or select specific attributes of list elements.

Data Input and Output Methods of Listceawler

Listceawler accepts website URLs as input, allowing users to specify the target sources for data extraction. The system utilizes standard HTTP requests to access web pages. Output methods are flexible, allowing users to choose between various formats such as CSV, JSON, or XML. This adaptability ensures compatibility with various data processing and analysis tools. The output data will include the extracted list items, along with metadata such as the source URL and the date of extraction.

For instance, a user might input the URL of a product listing page and receive a JSON file containing all the product names, prices, and descriptions.

Operational Flowchart of Listceawler

The operational flow can be visualized as follows: The system begins by receiving a target URL as input. The web crawler module then fetches the webpage’s HTML content. The list extraction module analyzes the HTML, identifying and extracting all list elements. The data cleaning module then processes the extracted data, handling any inconsistencies or errors. Finally, the data output module formats the cleaned data according to the user’s specified output format (e.g., CSV, JSON, XML) and provides the result.

Obtain access to ariana grande rule 34 to private resources that are additional.

Each step involves error handling to ensure robustness and reliability. A visual representation would show a sequential flow from input (URL) to output (formatted data), with feedback loops for error handling at each stage. The flowchart would use standard flowchart symbols (e.g., rectangles for processes, diamonds for decisions, parallelograms for input/output).

Ethical Considerations of “Listceawler”

The development and deployment of a “listceawler,” a hypothetical system for automated data collection from online lists, necessitates a careful consideration of ethical implications. The potential for misuse, particularly concerning privacy and data security, demands a proactive approach to responsible data handling and adherence to legal frameworks. This section will explore the key ethical, legal, and practical aspects of using a listceawler.

Privacy Concerns Associated with Listceawler Systems

The primary ethical concern surrounding listceawlers revolves around privacy. These systems, by their nature, collect data from various online sources, potentially including personally identifiable information (PII). This could encompass names, email addresses, phone numbers, and even location data, depending on the target websites. The unauthorized collection and subsequent use of such data raise serious privacy violations, particularly if the data is used without informed consent or for purposes beyond what was reasonably expected by the data subjects.

For example, a listceawler designed to scrape contact information from public business directories could inadvertently collect private information from individuals inadvertently included in those directories. This highlights the need for robust filtering mechanisms and strict adherence to data minimization principles.

Legal and Regulatory Aspects of Listceawler Development and Deployment

The legal landscape surrounding data collection varies considerably across jurisdictions. Developing and deploying a listceawler requires careful consideration of relevant laws, including data protection regulations such as the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) in the United States. These regulations Artikel strict rules regarding data collection, storage, processing, and usage, including requirements for obtaining explicit consent and providing transparent data privacy policies.

Failure to comply with these regulations can result in significant financial penalties and reputational damage. Furthermore, intellectual property rights must also be considered. Scraping copyrighted material without permission constitutes infringement and could lead to legal action.

Responsible Data Handling Practices to Mitigate Risks

Mitigating the risks associated with listceawlers requires a multi-faceted approach focused on responsible data handling. This includes implementing robust data anonymization techniques to remove or mask PII wherever possible. Data minimization principles should be strictly adhered to, ensuring that only the absolutely necessary data is collected. Transparent data usage policies must be established, clearly outlining how collected data will be used and stored, and providing individuals with mechanisms to access, correct, or delete their data.

Regular security audits and vulnerability assessments are crucial to identify and address potential weaknesses in the system that could lead to data breaches. Finally, a clear and accessible mechanism for individuals to opt out of data collection should be implemented. By proactively incorporating these responsible data handling practices, developers can significantly reduce the ethical and legal risks associated with listceawler technology.

Technical Specifications of a Hypothetical “Listceawler”

A robust and efficient Listceawler requires careful consideration of its technical underpinnings. This includes selecting appropriate programming languages, defining necessary hardware and software components, establishing a comprehensive security framework, and integrating relevant APIs and libraries. The following sections detail these crucial aspects.

Suitable Programming Languages

The choice of programming language significantly impacts the Listceawler’s development, performance, and maintainability. Several languages are well-suited for this task, each offering distinct advantages. Python, with its extensive libraries for web scraping and data manipulation (such as Beautiful Soup and Scrapy), is a popular choice for its readability and ease of use. Java, known for its robustness and scalability, is another strong contender, particularly for large-scale crawling projects.

Go, with its concurrency features, offers excellent performance for handling numerous simultaneous requests. Finally, Node.js, leveraging JavaScript’s asynchronous capabilities, provides a highly efficient environment for handling network-intensive operations. The optimal language selection depends on the specific requirements and priorities of the Listceawler project.

Hardware and Software Components, Listceawler

The Listceawler’s hardware requirements depend on the scale of the crawling operation. For smaller projects, a standard desktop computer with sufficient RAM and processing power may suffice. However, large-scale crawling necessitates more powerful hardware, potentially including multiple servers working in parallel, high-bandwidth internet connectivity, and robust storage solutions to handle the vast amounts of data collected. Software components include an operating system (e.g., Linux, Windows), a database system (e.g., MySQL, PostgreSQL) for storing crawled data, and the Listceawler application itself, along with any necessary libraries and dependencies.

A well-designed system architecture, potentially incorporating load balancing and distributed crawling techniques, is crucial for scalability and reliability.

Security Framework

Protecting the Listceawler from vulnerabilities is paramount. A robust security framework should incorporate several key elements. These include input validation to prevent injection attacks, secure storage of sensitive data (such as API keys and credentials) using encryption and access control mechanisms, regular security audits to identify and address potential weaknesses, and implementation of rate limiting to avoid overloading target websites.

Furthermore, the system should be designed to handle potential errors and exceptions gracefully, preventing crashes and data loss. Regular updates and patching are essential to address known vulnerabilities in the software and its dependencies. Employing a well-defined security policy and adhering to best practices for web scraping are crucial aspects of this framework.

APIs and Libraries

Integrating relevant APIs and libraries can significantly enhance the Listceawler’s functionality.

- Web scraping libraries: Beautiful Soup (Python), Cheerio (Node.js), Scrapy (Python) provide tools for parsing HTML and extracting data.

- HTTP clients: Requests (Python), Axios (JavaScript) facilitate making HTTP requests to target websites.

- Database drivers: These allow interaction with database systems for data storage and retrieval (e.g., MySQL Connector/Python, pg).

- Cloud storage services: AWS S3, Google Cloud Storage offer scalable storage solutions for large datasets.

- Proxy services: Rotating proxies can help circumvent IP-based blocking by websites.

- Natural Language Processing (NLP) libraries: NLTK, spaCy can be used for text analysis and data processing.

Illustrative Example of “Listceawler” in Action

This example demonstrates how a hypothetical “Listceawler” could be used by a university library to maintain an updated catalog of available research papers. The library receives numerous papers daily, often in various formats and from different sources. Manually updating the catalog is time-consuming and prone to errors. A “Listceawler” automates this process, improving efficiency and accuracy.

Scenario: Updating the University Library’s Research Paper Catalog

The library uses a “Listceawler” to automatically update its online catalog of research papers. The “Listceawler” is configured to crawl several departmental websites and online repositories where faculty and students regularly upload their work.

Step 1: Input Data Acquisition. The “Listceawler” begins by accessing the specified websites and repositories using pre-defined URLs. It then identifies and extracts relevant information, such as paper titles, authors, abstract summaries, s, file types (PDF, DOCX, etc.), and download links. This data is gathered in a structured format, such as XML or JSON.

Step 2: Data Cleaning and Transformation. The extracted data often contains inconsistencies and requires cleaning. The “Listceawler” uses pre-programmed rules to standardize the format of authors’ names, remove extra whitespace, and handle different date formats. It also employs natural language processing (NLP) techniques to extract key information from abstract summaries, such as research topics and methodologies. The cleaned data is then transformed into a format suitable for the library’s database.

Step 3: Data Validation and Deduplication. Before updating the catalog, the “Listceawler” verifies the integrity of the extracted data. It checks for missing information, inconsistencies, and duplicates. A sophisticated algorithm identifies potential duplicates based on title similarity and author names, and flags them for manual review by a librarian.

Step 4: Database Update. Once the data is validated and deduplicated, the “Listceawler” updates the library’s online catalog database. This involves adding new entries, updating existing entries with new information (e.g., a revised version of a paper), and removing entries for papers that have been deleted from the source websites.

Step 5: Output and Reporting. The “Listceawler” generates a report detailing the number of new papers added, updated papers, and any errors encountered during the process. This report provides valuable insights into the growth of the library’s research collection and helps identify areas for improvement in the data extraction and processing pipelines.

Data Flow Representation

The data flow can be visualized as follows:Source Websites/Repositories –> Data Extraction (XML/JSON) –> Data Cleaning & Transformation (Standardized Format) –> Data Validation & Deduplication (Cleaned Data, Duplicate Flags) –> Database Update (Updated Database) –> Reporting (Summary of Actions & Errors)

In conclusion, the hypothetical “listceawler” system offers intriguing possibilities for efficient data processing and extraction from lists. While its potential benefits are significant, careful consideration of ethical implications, data privacy, and robust security measures are paramount for responsible development and deployment. Further research and development in this area could unlock innovative solutions across various fields, but only with a strong commitment to ethical and responsible practices.