Listcrowler: Unlocking the power of data extraction, this comprehensive guide delves into the functionality, applications, and ethical considerations surrounding this powerful tool. We will explore its core mechanisms, data acquisition processes, and compare its capabilities with other similar technologies. This exploration will provide a balanced perspective, addressing both its immense potential and the inherent risks associated with its use.

From understanding its technical architecture and limitations to exploring its diverse applications across various industries, we aim to provide a clear and informative overview. We will examine real-world case studies, highlighting successful implementations and addressing potential ethical dilemmas. Furthermore, we’ll Artikel best practices for responsible usage and suggest future development directions for Listcrowler.

Understanding ListCrawler Functionality

ListCrawler is a powerful tool designed for efficient and automated data extraction from online lists. Its core functionality revolves around intelligently navigating website structures and extracting specific data points, offering a streamlined approach to web scraping. This detailed explanation will cover its mechanisms, data acquisition process, comparative analysis with similar tools, and a visual representation of its operational flow.ListCrawler’s core mechanisms are built upon a combination of web crawling and data parsing techniques.

It employs sophisticated algorithms to identify and traverse lists embedded within websites, regardless of their formatting or structure. This includes handling various HTML tags, CSS selectors, and JavaScript-rendered content. The extracted data is then cleaned and organized, ensuring data integrity and usability.

Data Acquisition Process

The data acquisition process in ListCrawler begins with specifying the target URL containing the list. The software then initiates a web crawl, systematically navigating through the website’s structure, identifying and extracting relevant HTML elements containing the desired data. This process uses a combination of techniques, including HTML parsing, CSS selectors, and regular expressions, to accurately pinpoint and extract the target information.

Once extracted, the data undergoes a cleaning process to remove unwanted characters, format inconsistencies, and standardize the data for further analysis or storage. Error handling mechanisms are incorporated to manage situations such as broken links, unavailable pages, or changes in website structure.

Comparison with Similar Tools

Compared to other web scraping tools, ListCrawler offers a unique combination of features. While tools like Scrapy offer a more general-purpose approach to web scraping, requiring more coding expertise, ListCrawler focuses specifically on list extraction, providing a user-friendly interface and simplified workflow. Unlike simpler browser extensions that may have limitations in handling complex website structures or large datasets, ListCrawler is designed to handle more robust scenarios.

Find out about how chica rule 34 can deliver the best answers for your issues.

Its strength lies in its ability to efficiently extract data from dynamically generated lists, often a challenge for less sophisticated tools. This makes it particularly suitable for tasks requiring the extraction of large volumes of data from various online sources.

Operational Flowchart

The operational flow of ListCrawler can be visualized as follows:[Description of Flowchart: The flowchart begins with the user inputting a target URL. This is followed by a process box representing the web page download and parsing. A decision diamond then checks for the presence of lists on the page. If lists are found, a process box representing list identification and data extraction is executed.

If not, an error message is displayed. The extracted data then passes through a data cleaning and formatting process box. Finally, the cleaned data is outputted, either to a file or a database. Error handling is incorporated throughout the process, with feedback loops to manage issues such as network errors or website changes.]

ListCrawler’s Applications and Use Cases

ListCrawler, with its powerful web scraping capabilities, finds application across a wide spectrum of industries and tasks. Its ability to efficiently extract structured data from websites makes it an invaluable tool for businesses seeking to automate data collection and analysis processes. The versatility of ListCrawler allows it to adapt to diverse data sources and formats, significantly enhancing productivity and efficiency.

The following sections will explore specific examples of ListCrawler’s successful implementation across various sectors, highlighting the benefits and demonstrating its adaptability.

Industries Utilizing ListCrawler

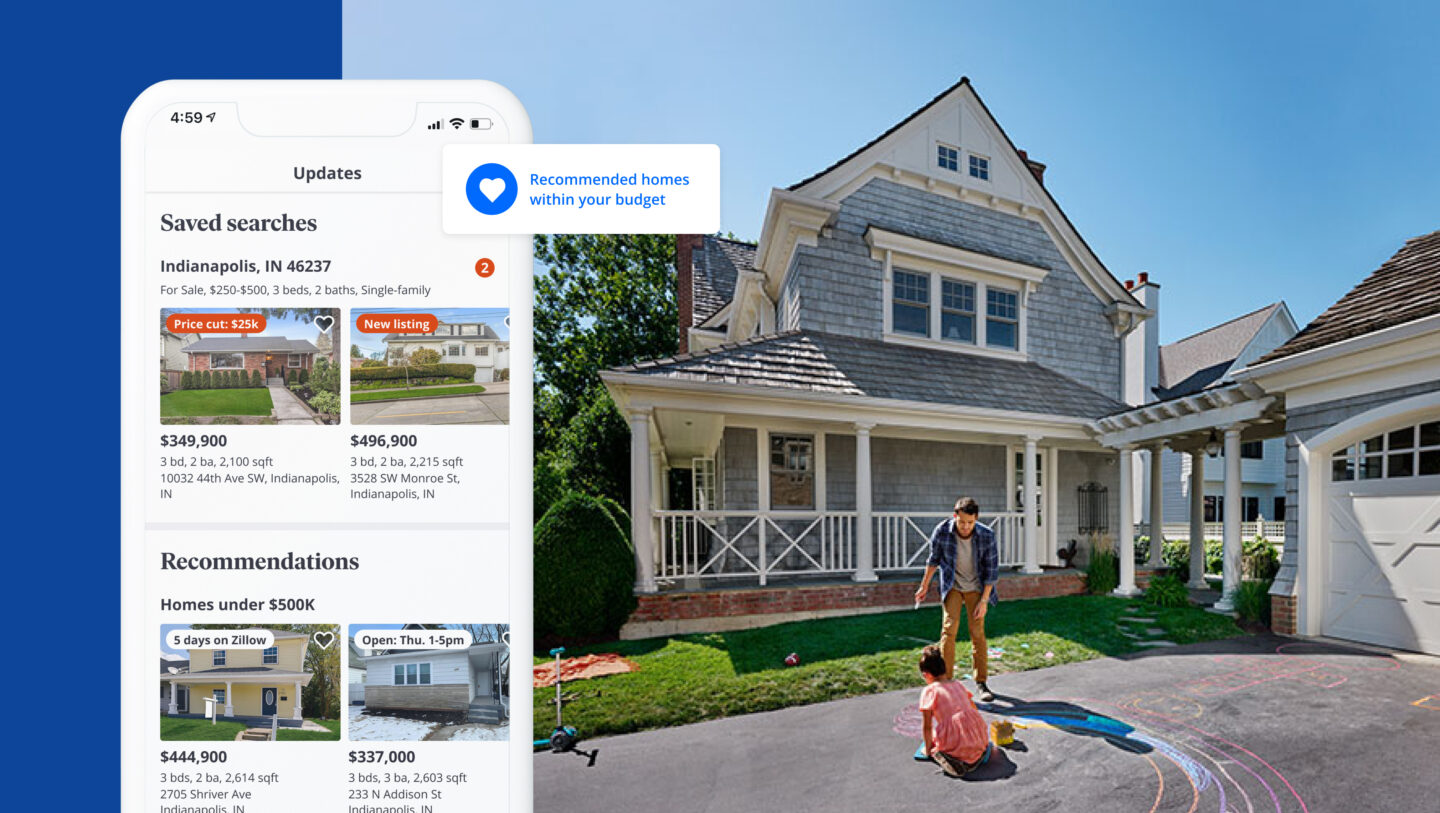

ListCrawler’s adaptability makes it a valuable asset in numerous sectors. For instance, e-commerce businesses leverage ListCrawler to monitor competitor pricing, track product availability, and gather customer reviews. Real estate companies use it to compile property listings from various online portals, while market research firms employ it to collect data on consumer trends and preferences from diverse online sources.

Financial institutions use ListCrawler for data aggregation and analysis, supporting risk assessment and investment decisions. Finally, the academic and research communities utilize it to gather vast amounts of publicly available data for analysis and publication.

Case Studies of Successful ListCrawler Implementations

Consider a large e-commerce retailer using ListCrawler to monitor competitor pricing on a daily basis. By automatically collecting pricing data from multiple competitor websites, the retailer can dynamically adjust its own pricing strategy to remain competitive, maximizing profit margins. This automated process replaces the manual, time-consuming task of individual price checks, resulting in significant cost savings and increased efficiency.

Another example involves a real estate company using ListCrawler to aggregate property listings from various online platforms. This consolidated data streamlines the process of identifying suitable properties for clients, enhancing the efficiency of their operations and improving customer service.

Benefits of Using ListCrawler in Different Scenarios

The benefits of using ListCrawler extend across several areas. In terms of cost savings, automating data collection eliminates the need for manual data entry, reducing labor costs and increasing efficiency. Data accuracy is improved through automation, minimizing human error inherent in manual data collection. Time savings are significant, as ListCrawler can collect data much faster than manual methods, freeing up valuable time for analysis and decision-making.

Finally, enhanced decision-making is enabled by providing access to comprehensive and timely data, allowing businesses to make more informed strategic choices.

Comparison of ListCrawler’s Suitability for Various Tasks

| Task | Suitability | Benefits | Limitations |

|---|---|---|---|

| Price Monitoring | High | Automated, real-time data; competitive advantage | Website structure changes may require adjustments |

| Lead Generation | Medium | Efficient contact information gathering | Data quality depends on source website accuracy |

| Market Research | High | Large-scale data collection; trend identification | Requires careful data cleaning and analysis |

| Real Estate Data Aggregation | High | Consolidated property listings; improved client service | Dealing with dynamic website structures |

Ethical Considerations and Potential Risks

ListCrawler, while a powerful tool for data acquisition, presents significant ethical and legal considerations. Its ability to automate the collection of vast amounts of data necessitates a careful approach to ensure responsible and legal usage. Misuse can lead to serious consequences for both the user and the targeted websites.

Potential Misuse and Ethical Implications

The automated nature of ListCrawler makes it susceptible to misuse. For example, scraping personal data without consent violates privacy regulations and ethical standards. Aggressive scraping can overload target servers, leading to denial-of-service (DoS) attacks, impacting the availability of the website for legitimate users. Furthermore, scraping copyrighted material without permission infringes intellectual property rights. Using ListCrawler to collect data for malicious purposes, such as creating spam email lists or facilitating phishing campaigns, is unethical and illegal.

The potential for harm extends to reputational damage for organizations whose data is scraped without authorization.

Potential Legal Ramifications

The legal implications of using ListCrawler depend heavily on the specific context and target website’s terms of service. Many websites explicitly prohibit scraping, and violating these terms can lead to legal action. Depending on the type of data scraped, violations of privacy laws like GDPR (in Europe) or CCPA (in California) could result in significant fines. Copyright infringement, as mentioned above, is another serious legal concern.

Furthermore, the use of ListCrawler in conjunction with other illegal activities, such as fraud or identity theft, will lead to severe legal penalties. Understanding and adhering to relevant legislation is crucial for responsible ListCrawler usage.

Best Practices for Responsible ListCrawler Implementation

Responsible use of ListCrawler requires a proactive approach to mitigating potential risks. Prior to implementing ListCrawler, it’s essential to thoroughly review the target website’s robots.txt file to understand its scraping policies. Respecting the `robots.txt` directives is crucial for ethical and legal compliance. Always implement delays between requests to avoid overloading servers and adhere to the website’s rate limits.

Obtain explicit consent whenever scraping personal data. Clearly identify yourself and your purpose when contacting website owners regarding data scraping activities. Only scrape publicly accessible data and avoid targeting sensitive information. Regularly review and update your scraping practices to align with evolving legal and ethical standards.

Measures to Mitigate Risks Associated with Data Scraping

Several measures can effectively mitigate the risks associated with data scraping using ListCrawler. Employing a robust error handling mechanism can prevent unexpected crashes and data loss. Using a proxy server network can mask your IP address and distribute the load across multiple points, reducing the risk of being blocked. Implementing a polite scraping strategy, including respecting `robots.txt` and rate limits, demonstrates responsible data acquisition.

Regularly monitoring your scraping activity for anomalies and potential violations can prevent unintended consequences. Storing scraped data securely and complying with relevant data protection regulations are crucial for minimizing risks. Conduct thorough legal and ethical reviews before initiating any scraping project.

Technical Aspects and Limitations: Listcrowler

ListCrawler’s functionality relies on a sophisticated interplay of web scraping techniques, data parsing algorithms, and efficient data management strategies. Understanding its technical architecture is crucial for optimizing its use and anticipating its limitations.ListCrawler’s core functionality is built upon a modular design. This allows for flexibility and scalability. The system begins by utilizing a robust web crawler to navigate target websites, identifying and extracting relevant HTML elements containing list data.

These elements are then passed to a parsing engine that employs regular expressions and other techniques to isolate the desired information, converting unstructured data into a structured format (e.g., CSV, JSON). Finally, the extracted data is stored and managed using a database system, enabling efficient retrieval and analysis. The choice of database depends on the scale and complexity of the data.

ListCrawler Architecture

The architecture comprises three primary modules: the web crawler, the data parser, and the data storage module. The web crawler employs multi-threading to accelerate the process of traversing websites and identifying list elements. The data parser utilizes a combination of techniques including regular expressions, HTML parsing libraries, and potentially machine learning algorithms (depending on the complexity of the target website’s structure) to accurately extract the desired data.

The data storage module utilizes a database (such as PostgreSQL or MongoDB) to store and manage the extracted data, providing efficient retrieval capabilities. The system is designed to handle large-scale data extraction tasks.

Limitations and Constraints

Several factors can limit ListCrawler’s effectiveness. Website structure variations pose a significant challenge; changes in a target website’s HTML can render ListCrawler’s extraction rules ineffective, requiring updates to its configuration. Dynamically loaded content, often reliant on JavaScript, can also present difficulties, as ListCrawler might not initially capture this information. Furthermore, the complexity of the target website and the volume of data to be extracted can directly impact processing time and resource consumption.

Finally, anti-scraping measures implemented by websites, such as CAPTCHAs and IP blocking, can significantly hinder ListCrawler’s operation.

Performance Comparison

Compared to manual data extraction, ListCrawler offers significant advantages in terms of speed and scalability. Manual extraction is time-consuming and prone to human error, especially for large datasets. Alternative automated methods, such as using APIs (Application Programming Interfaces) when available, generally provide cleaner and more reliable data but often depend on the target website offering such APIs and can be restricted by rate limits.

Web scraping tools that lack ListCrawler’s advanced parsing capabilities may struggle with complex website structures. ListCrawler’s modular design and efficient algorithms allow it to outperform these simpler tools in terms of speed and accuracy for many complex scenarios.

Technical Requirements

Effective utilization of ListCrawler requires a suitable computing environment. A robust server with sufficient processing power, memory (RAM), and storage capacity is necessary, particularly for large-scale data extraction tasks. The specific hardware requirements will vary depending on the complexity of the target websites and the volume of data being processed. Furthermore, network connectivity is critical; a stable and high-bandwidth internet connection is essential for efficient web crawling.

Finally, familiarity with basic command-line interface (CLI) operations or a user-friendly interface (if provided) is beneficial for configuring and managing ListCrawler.

Future Developments and Improvements

ListCrawler, while currently a powerful tool, possesses significant potential for enhancement and expansion. Future development should focus on increasing its efficiency, broadening its applications, and improving its user experience. This will involve addressing current limitations and exploring innovative ways to leverage the underlying technology.The following sections detail potential improvements and future directions for ListCrawler, focusing on specific features and applications.

These suggestions are based on current technological advancements and anticipated user needs.

Enhanced Data Processing and Filtering

Improving ListCrawler’s data processing capabilities is crucial for handling larger datasets and more complex extraction tasks. This involves optimizing algorithms for speed and efficiency, implementing advanced filtering options, and enhancing error handling. For example, the system could be improved to handle variations in website structures more effectively, automatically adapting to changes in target websites’ HTML layouts. This would reduce the need for manual adjustments and increase the robustness of the crawler.

Additionally, incorporating machine learning techniques could allow ListCrawler to intelligently identify and prioritize relevant information, reducing processing time and improving accuracy.

Integration with External Services and APIs

Integrating ListCrawler with other services and APIs will significantly expand its functionality and usability. This could include direct integration with data analysis platforms, allowing for seamless data processing and visualization. Integration with cloud storage services would simplify data management and storage. Furthermore, connecting ListCrawler with natural language processing (NLP) tools could enable the extraction and analysis of semantic information from web pages, going beyond simple matching.

An example of this would be automatically summarizing extracted text from multiple sources.

Improved User Interface and User Experience, Listcrowler

A more intuitive and user-friendly interface is essential for broader adoption. This includes simplifying the configuration process, providing better visual feedback during the crawling process, and offering more granular control over data extraction parameters. The implementation of a visual workflow editor, allowing users to graphically design their crawling tasks, would significantly improve ease of use. Furthermore, incorporating detailed logging and reporting features would aid in troubleshooting and performance analysis.

Feature Roadmap

A phased approach to development is essential for a successful enhancement strategy. The following roadmap Artikels key improvements, prioritizing user needs and technological feasibility.

- Phase 1 (Short-Term): Enhance data filtering capabilities, improve error handling, and refine the user interface for greater ease of use.

- Phase 2 (Mid-Term): Integrate with popular data analysis platforms and cloud storage services. Implement basic NLP capabilities for semantic analysis.

- Phase 3 (Long-Term): Develop a visual workflow editor, incorporate advanced machine learning algorithms for intelligent data extraction, and explore integration with other specialized APIs (e.g., sentiment analysis).

This roadmap provides a structured approach to development, allowing for iterative improvements and continuous enhancement of ListCrawler’s capabilities. The prioritization of features ensures a focused development process, delivering tangible benefits to users in a timely manner.

Innovative Applications

ListCrawler’s technology could be applied to a wide range of innovative applications beyond simple web scraping. For instance, it could be used to monitor online brand mentions, providing real-time insights into customer sentiment and brand perception. Another potential application is in the field of market research, allowing for the automated collection and analysis of competitor pricing data or product reviews.

Furthermore, ListCrawler could be adapted to track changes in legislation or regulations, providing valuable information for compliance officers. These examples highlight the potential of ListCrawler to become a versatile tool across numerous industries.

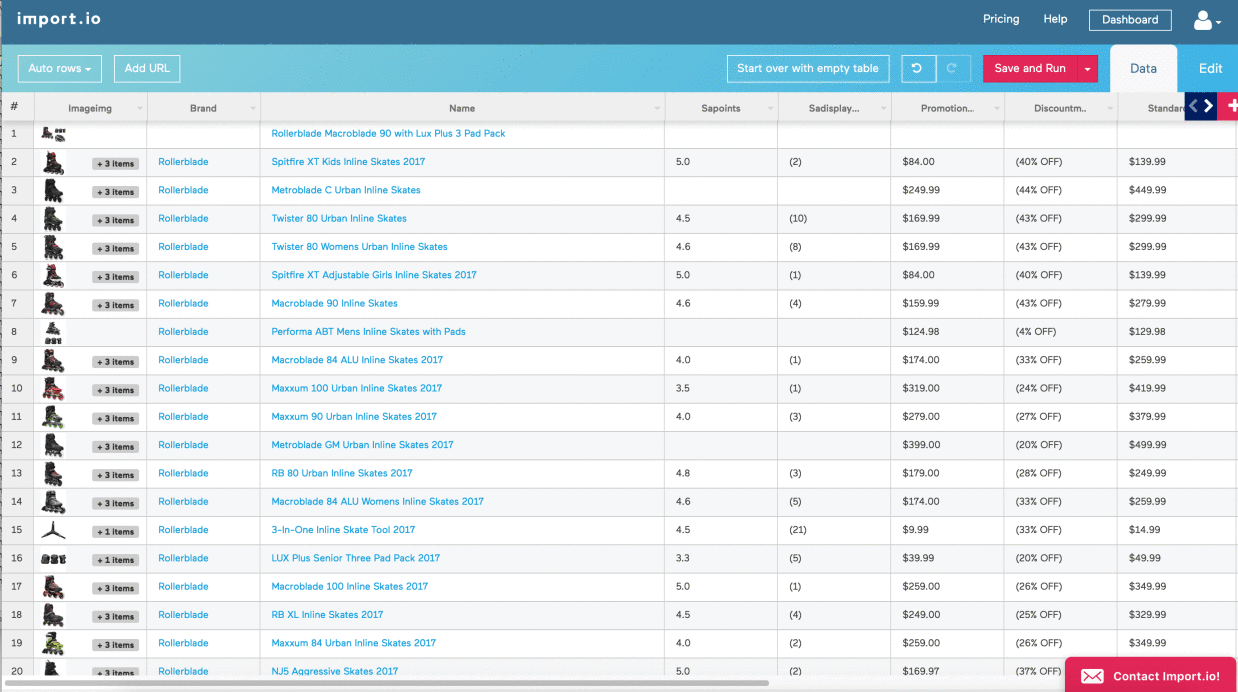

Illustrative Examples of ListCrawler Output

ListCrawler’s power lies in its ability to extract structured data from websites. This section provides concrete examples demonstrating the type of output generated and how this data can be visualized for analysis and decision-making. We will illustrate data extraction from a hypothetical product catalog, showcasing the versatility of ListCrawler.

Example of Product Catalog Data Extraction

Imagine ListCrawler is used to scrape data from an online retailer’s website selling electronics. The software successfully extracts information on several products. The extracted data includes product name, price, and a short description. This data, in its raw form, is then processed and organized for easier understanding and subsequent analysis.

Structured Data Output Example

The following unordered list shows a sample of the structured data extracted from the hypothetical electronics retailer’s website, representing the output format from ListCrawler. Each list item represents a product, containing its key attributes. This structured format facilitates easy import into spreadsheets or databases for further processing and analysis.

- Product Name: “High-Definition 4K Smart TV – 65 Inch” Price: $1299.99 Description: “Experience stunning visuals and smart features with this 65-inch 4K Smart TV. Enjoy vibrant colors and incredible detail.”

- Product Name: “Wireless Noise-Cancelling Headphones” Price: $249.99 Description: “Immerse yourself in your audio with these comfortable and high-performing noise-cancelling headphones.”

- Product Name: “High-Performance Gaming Laptop” Price: $1999.99 Description: “Power through demanding games with this high-performance gaming laptop, featuring a powerful processor and dedicated graphics card.”

- Product Name: “Smart Home Security System” Price: $399.99 Description: “Protect your home with this comprehensive smart home security system, including cameras, sensors, and a central control panel.”

Example Visualization: Bar Chart of Product Prices

A bar chart could effectively visualize the price distribution of the extracted products. The x-axis would represent the product names, while the y-axis would display their respective prices. This simple visualization quickly allows for comparison of product pricing, identifying potential price outliers or patterns within the product catalog. For instance, a longer bar would represent a higher-priced product, allowing for a quick visual comparison across all products in the dataset.

This type of chart is particularly useful for identifying price trends and making pricing decisions.

In conclusion, Listcrowler represents a powerful tool with significant potential for streamlining data acquisition across numerous sectors. However, responsible and ethical use is paramount. By understanding its capabilities, limitations, and potential risks, users can harness its power effectively while mitigating potential negative consequences. This guide serves as a starting point for informed decision-making and responsible implementation of Listcrowler technology.